Predictive AI in E-commerce: Ethical Case Studies

Explore the ethical implications of predictive AI in e-commerce, focusing on transparency, data privacy, and fairness to build customer trust.

Predictive AI is reshaping e-commerce, but its use comes with ethical challenges. While it powers personalized recommendations, fraud detection, and inventory management, it also raises concerns about data privacy, bias, and transparency. Companies like Amazon and Target have faced scrutiny over how AI systems handle sensitive customer data, leading to critical lessons about responsible practices.

Key takeaways:

- How it works: Predictive AI analyzes data like browsing history and purchase patterns to forecast customer behavior and trends.

- Ethical concerns: Misuse of data, lack of transparency, and bias in algorithms can harm trust and lead to regulatory penalties.

- Solutions: Businesses should prioritize transparency, informed consent, and fairness in AI systems while adhering to data protection laws like the CCPA.

Ethical Guidelines for Predictive AI in E-commerce

As predictive AI becomes a cornerstone of e-commerce, the need for clear ethical guidelines has never been more pressing. The rapid integration of these technologies has highlighted risks like eroding customer trust and potential regulatory penalties. But it's not just about avoiding pitfalls - ethical practices in AI pave the way for responsible systems that benefit both businesses and consumers in the long run.

Core Ethical Principles

To use predictive AI responsibly, businesses must prioritize transparency, informed consent, non-discrimination, and privacy protection [2].

Transparency means being upfront about AI usage and how decisions are made. It's no longer enough to hide behind dense privacy policies. Customers deserve clear, straightforward explanations of how AI influences outcomes, such as product recommendations or targeted ads. Adopting explainable AI tools can help demystify these processes, fostering trust and ensuring readiness for future regulations.
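To make the idea of an explanation concrete, here is a minimal sketch that assumes a simple linear scoring model. In such a model, each feature's contribution to the score is just its weight times its value. The class name, feature names, and weights are hypothetical. Production recommenders are usually far more complex and need dedicated explanation methods, but a customer-facing explanation would draw on the same kind of output: a ranked list of "why" factors.

```python
from dataclasses import dataclass


@dataclass
class LinearScorer:
    """Hypothetical linear relevance model: score = bias + sum(weight * value)."""
    weights: dict[str, float]
    bias: float = 0.0

    def score(self, features: dict[str, float]) -> float:
        # Features without a weight contribute nothing.
        return self.bias + sum(
            self.weights.get(name, 0.0) * value for name, value in features.items()
        )

    def explain(self, features: dict[str, float], top_k: int = 3) -> list[tuple[str, float]]:
        """Return the top_k features ranked by the size of their contribution."""
        contributions = [
            (name, self.weights.get(name, 0.0) * value) for name, value in features.items()
        ]
        contributions.sort(key=lambda item: abs(item[1]), reverse=True)
        return contributions[:top_k]


# Illustrative weights and features (not from any real system).
scorer = LinearScorer(
    weights={"viewed_hiking_gear": 1.2, "past_outdoor_purchases": 0.8, "days_since_visit": -0.05},
    bias=0.1,
)
features = {"viewed_hiking_gear": 3, "past_outdoor_purchases": 2, "days_since_visit": 10}
print(f"score = {scorer.score(features):.2f}")  # score = 4.80
for name, contribution in scorer.explain(features, top_k=2):
    print(f"{name}: {contribution:+.2f}")
# viewed_hiking_gear: +3.60
# past_outdoor_purchases: +1.60
```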

Informed consent requires obtaining explicit permission before collecting or using personal data for AI purposes. This involves creating privacy policies that are easy to understand and offering simple opt-out options. Customers should know exactly what they’re agreeing to and how their data will be used.
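One way to enforce "explicit permission before use" in code is to record consent per customer and per purpose, and to treat a missing record as a refusal. The sketch below is hypothetical: it uses an in-memory store and made-up purpose names. A real system would persist these records, keep an audit trail of when consent was given or withdrawn, and check them in every pipeline that touches personal data.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class ConsentLedger:
    """Hypothetical in-memory record of purpose-specific consent."""
    # (customer_id, purpose) -> UTC time of the most recent explicit grant
    grants: dict[tuple[str, str], datetime] = field(default_factory=dict)

    def grant(self, customer_id: str, purpose: str) -> None:
        self.grants[(customer_id, purpose)] = datetime.now(timezone.utc)

    def withdraw(self, customer_id: str, purpose: str) -> None:
        # Opting out deletes the grant, so later checks fail immediately.
        self.grants.pop((customer_id, purpose), None)

    def allows(self, customer_id: str, purpose: str) -> bool:
        # Default is "no": the absence of a record never counts as consent.
        return (customer_id, purpose) in self.grants


ledger = ConsentLedger()
ledger.grant("c-123", "personalized_recommendations")
print(ledger.allows("c-123", "personalized_recommendations"))  # True
print(ledger.allows("c-123", "targeted_ads"))  # False: consent is per purpose
ledger.withdraw("c-123", "personalized_recommendations")
print(ledger.allows("c-123", "personalized_recommendations"))  # False
```

Because consent is keyed by purpose, agreeing to recommendations does not also authorize ad targeting. Customers therefore know exactly what each agreement covers.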

Non-discrimination and fairness are essential to prevent biased outcomes. AI systems often reflect the biases in their training data, which can lead to unfair treatment of certain groups. Regular audits, diverse training datasets, and human oversight are critical to minimizing these risks and ensuring equitable outcomes.
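A regular audit can start with a simple comparison: how often does each customer group receive a favorable outcome, such as being shown a discount? This sketch computes per-group selection rates and the ratio between the lowest and highest rate. The 0.8 cut-off borrows the "four-fifths" rule of thumb from U.S. employment-selection guidance. It is only a screening signal, not a legal standard for e-commerce. The sketch assumes group labels are available for auditing, and the group names and data are hypothetical.

```python
from collections import defaultdict


def selection_rates(decisions):
    """decisions: iterable of (group, favorable: bool). Returns rate per group."""
    totals = defaultdict(int)
    favorable = defaultdict(int)
    for group, was_favorable in decisions:
        totals[group] += 1
        favorable[group] += int(was_favorable)
    return {group: favorable[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Lowest group rate divided by highest; 1.0 means identical rates."""
    highest = max(rates.values())
    return min(rates.values()) / highest if highest > 0 else 1.0


# Hypothetical audit sample: who was shown a discount offer.
decisions = (
    [("group_a", True)] * 9 + [("group_a", False)] * 1
    + [("group_b", True)] * 6 + [("group_b", False)] * 4
)
rates = selection_rates(decisions)
ratio = disparate_impact_ratio(rates)
print(rates)  # {'group_a': 0.9, 'group_b': 0.6}
print(f"ratio = {ratio:.2f}, flag for review: {ratio < 0.8}")  # ratio = 0.67, flag for review: True
```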

Privacy protection is a must, especially since predictive AI relies on sensitive personal data to analyze customer behavior. Measures like data encryption, restricted access, and anonymization are key safeguards. A 2024 Pew Research survey revealed that 72% of U.S. consumers are concerned about how their personal information is used in AI-driven marketing, underscoring the urgency of strong privacy protections.
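Anonymization covers a range of techniques. A common first step is pseudonymization: direct identifiers such as email addresses are replaced with keyed hashes before the data reaches analytics or model training. The sketch below uses HMAC-SHA256 from Python's standard library. This is pseudonymization, not full anonymization. Anyone holding the key can re-derive a token from a known identifier, and the remaining attributes may still identify someone. The key handling shown is a placeholder assumption.

```python
import hashlib
import hmac
import os


def pseudonymize(identifier: str, secret_key: bytes) -> str:
    """Replace a direct identifier with a keyed HMAC-SHA256 token (64 hex chars).

    The same identifier and key always give the same token, so records can still
    be joined for analysis, but without the key nobody can recompute tokens
    from guessed emails or customer IDs.
    """
    normalized = identifier.strip().lower()  # assumption: IDs are case-insensitive emails
    return hmac.new(secret_key, normalized.encode("utf-8"), hashlib.sha256).hexdigest()


# Placeholder: in practice the key would come from a secrets manager and be
# stored separately from the pseudonymized data.
key = os.urandom(32)
record = {"email": "Jane.Doe@example.com", "last_order_total": 84.50}
safe_record = {
    "customer_token": pseudonymize(record["email"], key),
    "last_order_total": record["last_order_total"],
}
print(safe_record)
```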

Ignoring these principles can have serious consequences. Companies risk hefty fines, legal actions, and reputational damage. Unethical AI practices can lead to biased algorithms, data breaches, and exclusionary practices that disproportionately harm vulnerable groups. Moreover, these ethical priorities align closely with emerging U.S. regulations.

U.S. Regulatory Landscape

In the U.S., regulators are stepping up to ensure AI systems operate transparently and fairly. The Federal Trade Commission (FTC) has taken the lead, emphasizing transparency, accuracy, and fairness in AI while warning against deceptive or discriminatory practices. Companies should be truthful about how they use AI and must comply with consumer protection laws, including Section 5 of the FTC Act, which prohibits unfair or deceptive practices.

At the state level, the California Consumer Privacy Act (CCPA) has set a benchmark for privacy. The CCPA requires businesses to inform consumers about their data collection practices, give them access to their data, and honor requests to opt out of the sale or sharing of their personal information, including sharing for cross-context behavioral advertising. As other states adopt similar laws, businesses face a growing patchwork of regulations.

To stay ahead, companies must embed data protection and transparency into their AI systems from the start. This includes securing informed consent, explaining AI decision-making clearly, and maintaining detailed records of data processing activities.

The regulatory shift is clear: consumers are gaining more control over AI-driven decisions, and transparency is becoming non-negotiable. Businesses that embrace ethical AI practices now will be better equipped to meet these regulatory demands, while those that lag behind may face disruptions that hurt their bottom line.

Platforms like Feedcast.ai are making it easier for businesses to align with these standards. They offer tools that respect privacy, provide transparent analytics, and enable unbiased targeting, helping companies stay compliant and build trust with their customers.

Case Studies: Predictive AI in Action

Real-world examples show how major retailers have tackled the ethical challenges of predictive AI while still achieving strong business results. These case studies reveal common hurdles and the practical steps taken to ensure AI implementation remains responsible and effective. They demonstrate how ethical safeguards are a cornerstone of successful AI use in retail.

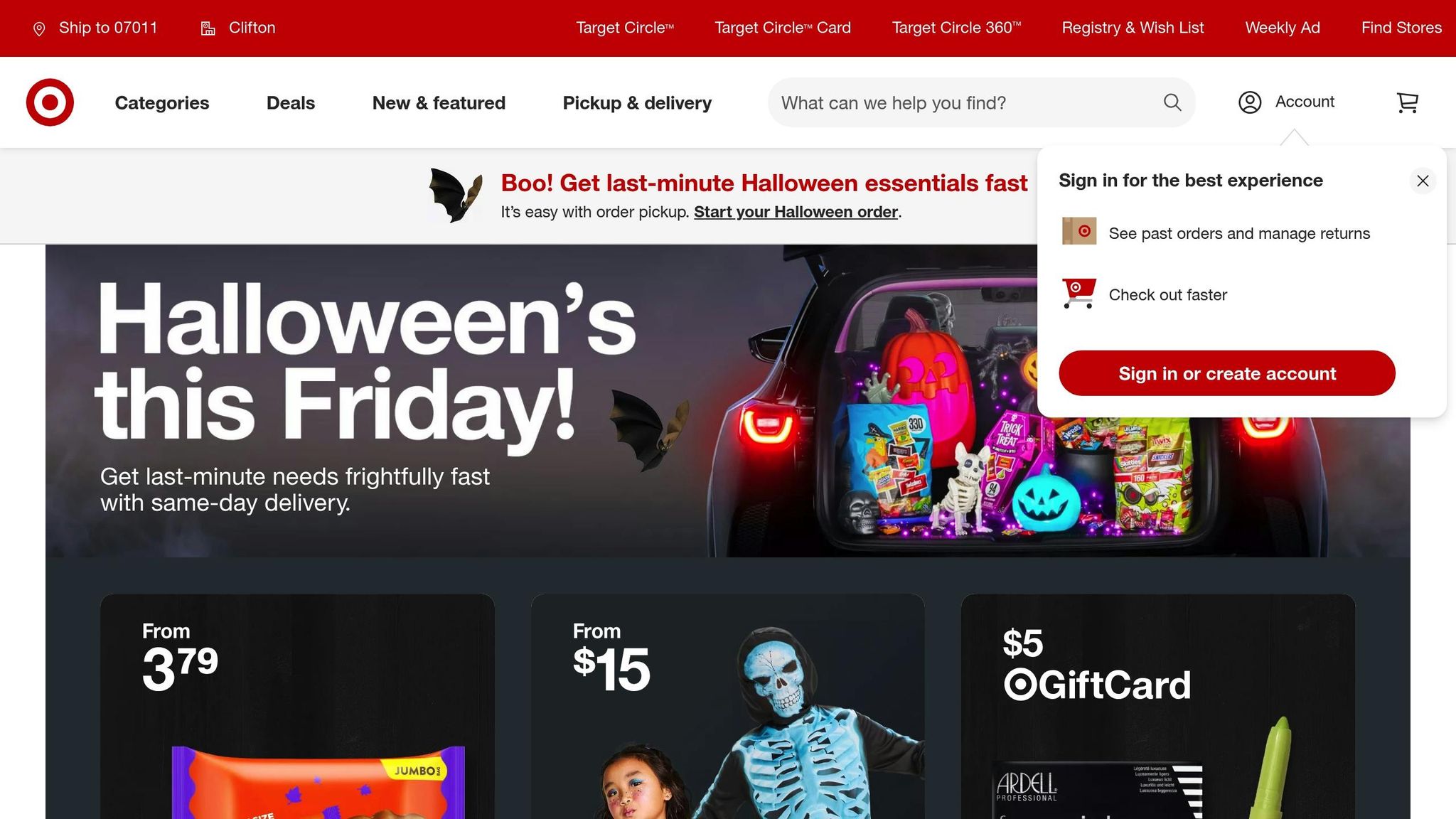

Case Study 1: Target's Personalized Marketing Model

Target’s pregnancy prediction model is a standout example of predictive AI in retail. By analyzing customers’ purchase histories and online behavior, the system estimates which shoppers are likely to be expecting a baby, enabling the company to run highly targeted marketing campaigns for baby-related products.

However, privacy concerns quickly arose. To address these, Target took steps to safeguard customer data. The company now uses aggregated data to protect individual privacy, enforces strict access controls on sensitive insights, and provides clear opt-out options for customers who don’t want personalized promotions.

These changes have helped rebuild customer trust, fostering stronger long-term loyalty [1].
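Using aggregated data is one of the safeguards listed for this case. The following is a generic illustration of one common aggregation technique, not Target's actual method. It reports counts per segment and suppresses any segment with too few distinct customers, because a very small count can point to a specific person. The threshold of 10 and the segment names are hypothetical.

```python
MIN_GROUP_SIZE = 10  # hypothetical policy threshold


def segment_counts(records, min_group_size=MIN_GROUP_SIZE):
    """Count distinct customers per segment, dropping segments that are too small.

    records: iterable of (customer_id, segment) pairs.
    Small segments are suppressed entirely rather than reported with low counts.
    """
    customers = {}
    for customer_id, segment in records:
        customers.setdefault(segment, set()).add(customer_id)
    return {
        segment: len(ids)
        for segment, ids in customers.items()
        if len(ids) >= min_group_size
    }


records = [(f"c{i}", "outdoor") for i in range(25)] + [
    ("c1", "baby_products"), ("c2", "baby_products"), ("c1", "baby_products")
]
print(segment_counts(records))  # {'outdoor': 25}
```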

Case Study 2: Amazon's Recommendation Engine

Amazon’s recommendation engine powers personalized product suggestions by processing massive amounts of customer data. Using collaborative filtering and machine learning, it analyzes purchase history, browsing habits, product ratings, and shopping cart contents to predict what customers might want to buy next.

The complexity of this system, however, has led to a "black box" issue - customers often don’t understand why certain recommendations appear. To address this, Amazon has worked to make its recommendation logic more transparent. Customers can now review and adjust their data settings, giving them greater control over their experience.

These transparency efforts have not only improved customer satisfaction but also boosted product sales and profit margins [5][6]. Such examples pave the way for platforms like Feedcast.ai, which emphasize ethical AI in their operations.
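Amazon has publicly described its approach as item-to-item collaborative filtering. The toy version below assumes plain purchase data and measures item similarity as the cosine similarity of the items' buyer sets. It also shows one way to address the "black box" problem: for each suggestion, it keeps the customer's own past purchase that contributed most, which could be shown as a "because you bought..." explanation. This is a hypothetical sketch, not Amazon's production system, and the item names are made up.

```python
from collections import defaultdict
from itertools import combinations
from math import sqrt


def item_similarities(baskets):
    """Cosine similarity between items, based on which customers bought them.

    baskets: dict of customer_id -> set of purchased item_ids.
    """
    buyers = defaultdict(set)
    for customer, items in baskets.items():
        for item in items:
            buyers[item].add(customer)
    sims = defaultdict(dict)
    for a, b in combinations(buyers, 2):
        overlap = len(buyers[a] & buyers[b])
        if overlap:
            score = overlap / sqrt(len(buyers[a]) * len(buyers[b]))
            sims[a][b] = sims[b][a] = score
    return sims


def recommend(owned_items, sims, top_k=3):
    """Rank items the customer doesn't own; keep the strongest 'because' item."""
    scores = defaultdict(float)
    reasons = {}  # candidate -> (owned item, similarity) with the largest similarity
    for owned in owned_items:
        for candidate, sim in sims.get(owned, {}).items():
            if candidate in owned_items:
                continue
            scores[candidate] += sim
            if candidate not in reasons or sim > reasons[candidate][1]:
                reasons[candidate] = (owned, sim)
    ranked = sorted(scores, key=scores.get, reverse=True)[:top_k]
    return [(item, round(scores[item], 3), reasons[item][0]) for item in ranked]


baskets = {
    "u1": {"tent", "sleeping_bag", "headlamp"},
    "u2": {"tent", "sleeping_bag"},
    "u3": {"tent", "stove"},
    "u4": {"headlamp", "batteries"},
}
sims = item_similarities(baskets)
for item, score, because in recommend({"tent", "headlamp"}, sims):
    print(f"{item} (score {score}): because you bought {because}")
```

Similarities can be precomputed offline, so only the cheap `recommend` step runs per customer. That split is the main reason item-to-item approaches scale well.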

How Feedcast Supports Ethical AI Practices

Building on the lessons from companies like Target and Amazon, Feedcast.ai prioritizes transparency and fairness in its AI-powered advertising tools. The platform addresses similar challenges through advanced data management and clear campaign transparency.

Feedcast.ai’s unified analytics dashboard provides businesses with a clear view of how AI algorithms make targeting decisions across multiple advertising channels. This helps companies better understand and communicate their automated marketing strategies. Additionally, its AI-powered data enrichment features include built-in error detection and data validation, ensuring that customer information stays accurate and up to date.

The platform’s smart targeting tools are designed to reach diverse audiences while maintaining algorithmic fairness. By centralizing multi-channel advertising efforts, Feedcast.ai ensures consistent privacy standards and effective consent management.

For smaller e-commerce businesses that may lack the resources to develop their own ethical AI frameworks, Feedcast.ai offers accessible tools to automate responsibly. Its transparent reporting and user-friendly dashboards make it easier for businesses to monitor AI-driven campaigns and ensure they align with ethical practices.

Best Practices for Ethical AI in E-commerce

The experiences of major players like Target and Amazon highlight key lessons for using predictive AI responsibly. These real-world cases show that ethical AI can drive growth while protecting customers. To balance innovation with responsibility, focus on three critical areas.

Data Privacy and Consent

Ethical AI starts with strong data management practices. One essential principle is data minimization - only collect the information your AI models truly need. This reduces privacy risks and helps ensure compliance with regulations like the California Consumer Privacy Act (CCPA).
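Data minimization can be enforced mechanically. For each processing purpose, declare which fields a model may see, and strip everything else before the data leaves the source system. The purpose names and field lists below are hypothetical. In practice they would come from your own data inventory and privacy review.

```python
# Hypothetical purpose -> permitted fields mapping.
ALLOWED_FIELDS = {
    "product_recommendations": {"customer_token", "purchase_history", "viewed_products"},
    "fraud_screening": {"customer_token", "order_total", "shipping_country", "payment_method"},
}


def minimize(record: dict, purpose: str) -> dict:
    """Return a copy of record containing only the fields allowed for purpose."""
    allowed = ALLOWED_FIELDS.get(purpose)
    if allowed is None:
        # Fail closed: an undeclared purpose gets no data at all.
        raise ValueError(f"no data-use policy defined for purpose {purpose!r}")
    return {key: value for key, value in record.items() if key in allowed}


customer = {
    "customer_token": "tok_001",
    "birth_date": "1990-04-12",
    "home_address": "12 Example St",
    "purchase_history": ["tent", "headlamp"],
    "viewed_products": ["stove"],
}
print(minimize(customer, "product_recommendations"))
```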

Past cases emphasize the importance of obtaining clear and explicit user consent [1]. Companies that prioritize transparency have seen trust restored and even improved business performance.

Adopting privacy-by-design practices is another critical step. This includes anonymizing data, limiting access, and conducting regular impact assessments. These measures not only protect customers but also shield businesses from potential legal complications.

Consent mechanisms should be straightforward and easy to use. Feedcast.ai, for instance, clearly outlines its data practices and offers simple opt-out options [4]. Users can withdraw consent easily through accessible links and detailed privacy policies.

Bias Prevention and Algorithmic Fairness

To prevent biased outcomes, start with diverse training datasets. When data is too narrow or reflects historical biases, AI systems are more likely to perpetuate discrimination.
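Before training, a quick representation check can show whether some customer groups are under-represented relative to the population the model will serve. This hypothetical helper compares each group's share of the training examples with a reference share, for example from the overall customer base. It reports gaps larger than a tolerance. The group labels, reference shares, and 5-point tolerance are illustrative assumptions.

```python
from collections import Counter


def representation_gaps(training_groups, reference_shares, tolerance=0.05):
    """Compare each group's share of the training data with a reference share.

    training_groups: list of group labels, one per training example.
    reference_shares: dict group -> expected share (e.g., of the customer base).
    Returns {group: observed - expected} for groups off by more than tolerance.
    """
    counts = Counter(training_groups)
    total = len(training_groups)
    gaps = {}
    for group, expected in reference_shares.items():
        observed = counts.get(group, 0) / total if total else 0.0
        if abs(observed - expected) > tolerance:
            gaps[group] = round(observed - expected, 3)
    return gaps


training = ["urban"] * 80 + ["rural"] * 20
reference = {"urban": 0.6, "rural": 0.4}
print(representation_gaps(training, reference))
```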

Using explainable AI is another critical practice. Instead of relying on opaque "black box" systems, opt for models that offer transparency about how decisions are made. This is especially important when customers want to understand why they received a specific recommendation or ad.

Human oversight also plays a vital role in ensuring fairness. Regularly reviewing AI decisions - particularly in sensitive areas like credit approvals or personalized pricing - can help catch and correct issues early.
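Human oversight is easier to sustain when the routing rule is explicit. The sketch below uses hypothetical decision types and a hypothetical confidence threshold. It sends every decision in a sensitive category, plus any low-confidence decision, to a human review queue instead of acting on it automatically.

```python
from dataclasses import dataclass

# Hypothetical categories that always require a human sign-off.
SENSITIVE_KINDS = {"credit_approval", "personalized_price"}


@dataclass
class Decision:
    kind: str          # what the model decided about
    customer_id: str
    confidence: float  # the model's own confidence estimate, in [0, 1]


def route(decision: Decision, min_confidence: float = 0.9) -> str:
    """Return 'human_review' for sensitive or low-confidence decisions, else 'auto'."""
    if decision.kind in SENSITIVE_KINDS or decision.confidence < min_confidence:
        return "human_review"
    return "auto"


print(route(Decision("product_recommendation", "c-1", 0.95)))  # auto
print(route(Decision("personalized_price", "c-1", 0.99)))      # human_review
print(route(Decision("product_recommendation", "c-2", 0.40)))  # human_review
```

Logging reviewers' overrides alongside the model output would also give regular reviews concrete material to analyze.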

Shopify’s fraud detection system is a great example of ethical AI in action. By analyzing over 10 billion transactions, the system achieves a 99.7% safe order fulfillment rate while maintaining fairness for all customers [3]. This success stems from embedding fairness considerations into the system from the outset.

Clear Communication with Consumers

Transparency is essential for building trust. Make sure customers understand how AI is being used in your marketing and what data is being collected. Clearly label AI-generated content and explain how decisions are made, so users feel informed and included.

Privacy policies should be straightforward, offering customers simple tools to manage their data. For instance, explain data processing purposes in plain terms, such as "personalized advertising and content, advertising and content measurement, audience research, and service development" [4]. This clarity ensures customers know exactly how their information is being used.

Keep customers in the loop as your AI systems evolve. Share updates about new features, changes in data handling, and improvements in privacy protections. This ongoing communication demonstrates a commitment to ethical AI practices.

The success of these efforts can be measured through customer feedback, opt-in and opt-out rates, and the number of incidents related to bias or privacy. Companies that prioritize ethical AI often see stronger customer loyalty and engagement, and these practices set the stage for broader, responsible AI adoption across e-commerce platforms.
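Opt-in and opt-out rates only mean something if their denominators are defined consistently. This minimal sketch measures the opt-in rate against customers who were shown a consent prompt. It measures the opt-out rate against consents active at the start of the period. Both definitions and the field names are illustrative choices, not an industry standard.

```python
from dataclasses import dataclass


@dataclass
class ConsentPeriodStats:
    prompted: int         # customers shown a consent prompt during the period
    opted_in: int         # of those, customers who granted consent
    active_at_start: int  # consents in force when the period began
    withdrawn: int        # of those, consents withdrawn during the period

    def opt_in_rate(self) -> float:
        return self.opted_in / self.prompted if self.prompted else 0.0

    def opt_out_rate(self) -> float:
        return self.withdrawn / self.active_at_start if self.active_at_start else 0.0


# Hypothetical monthly figures.
march = ConsentPeriodStats(prompted=2000, opted_in=1300, active_at_start=9000, withdrawn=45)
print(f"opt-in rate: {march.opt_in_rate():.1%}")    # opt-in rate: 65.0%
print(f"opt-out rate: {march.opt_out_rate():.2%}")  # opt-out rate: 0.50%
```

Tracking these figures over time tells you more than any single value. A before-and-after comparison around a change to the consent prompt is one example.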

Conclusion: Building Trust Through Ethical Predictive AI

Ethical predictive AI isn't just a buzzword - it's a game-changer for businesses aiming for sustainable success. By focusing on transparency, data privacy, and fairness, companies can create strong, long-lasting relationships with their customers, paving the way for steady growth.

Take Target's pregnancy prediction model as an example. It highlighted both the incredible potential and the immense responsibility tied to predictive AI. The initial backlash over privacy concerns was a wake-up call for the industry: transparency and consent aren't optional - they're necessities from the get-go [1]. Target's response, which included bolstering data protection measures and improving transparency, proved that ethical corrections can rebuild trust.

Shopify's fraud detection system tells a similar story. By embedding fairness directly into its AI framework, Shopify achieved an impressive 99.7% safe order fulfillment rate [3]. This shows that ethical AI doesn't just protect customers - it delivers tangible business results.

For companies looking to embrace ethical predictive AI, the secret lies in choosing tools that prioritize responsible practices right from the start. Platforms like Feedcast.ai set a strong example with their clear data privacy policies, explicit consent mechanisms, and easy opt-out options. Their AI-powered data enrichment ensures accurate product representation, while a unified analytics dashboard keeps campaign performance transparent and measurable.

Ethical AI also provides a competitive edge. Businesses can stand out by adopting explainable AI, conducting regular bias audits, and ensuring human oversight for critical decisions. Partnering with certified platforms, such as Google Comparison Shopping Service (CSS) partners, helps ensure compliance with industry standards. These practices aren't just safeguards - they're the building blocks of future innovation in e-commerce.

Companies that proactively embrace ethical measures are better positioned to avoid regulatory pitfalls, strengthen customer loyalty, and enhance their brand reputation. As predictive AI continues to evolve, the businesses that succeed will be those that view ethical practices not as a limitation, but as a stepping stone to growth. The future of e-commerce belongs to companies that can deliver personalized, data-driven experiences while safeguarding privacy and staying transparent. By learning from industry examples and committing to responsible AI, businesses can build the trust that turns one-time buyers into lifelong customers.

FAQs

How can e-commerce businesses use predictive AI ethically to build customer trust?

E-commerce businesses have the opportunity to harness predictive AI in a way that respects ethical boundaries by focusing on transparency, fairness, and customer privacy. This means being upfront about how AI is implemented, ensuring algorithms remain unbiased, and protecting customer data at all costs.

Feedcast.ai is one tool that can help achieve these goals. By offering AI-powered features such as ad campaign optimization and improved product visibility, it helps businesses stay competitive without compromising ethics. For instance, its AI-driven ad creation and performance tracking features allow companies to deliver relevant, effective advertising that meets customer expectations while building trust.

How can businesses reduce bias in AI algorithms to ensure fair treatment of all customers?

To tackle bias in AI algorithms, businesses need to focus on transparency and fairness during both development and deployment. This starts with using datasets that are diverse and representative of the populations the AI will serve. Regular audits of the algorithms are also crucial to spot and address any potential biases that may emerge. On top of that, establishing clear ethical guidelines can provide a framework for responsible decision-making.

Bringing together multidisciplinary teams - such as data scientists, ethicists, and experts from the relevant fields - adds another layer of oversight. These varied perspectives can help uncover and correct unintended biases. By following these steps, companies can build AI systems that treat all customer groups equitably while promoting trust and inclusivity.

What risks do e-commerce companies face if they use predictive AI unethically?

E-commerce businesses that misuse predictive AI can face serious consequences, including harming their reputation, losing customer trust, and encountering legal or regulatory challenges. Practices like relying on biased algorithms or mishandling customer data can spark public outrage, hurt sales, and even result in fines or lawsuits.

On the flip side, focusing on transparency, fairness, and responsible data practices doesn't just help avoid these pitfalls - it can also build stronger customer relationships and keep businesses ahead in a competitive market.

Geoffrey G.